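Introduction

This tutorial applies dynamic quantization to a BERT model, using the BERT model from the HuggingFace Transformers examples. It walks step by step through converting BERT into a dynamically quantized model.

- BERT (Bidirectional Encoder Representations from Transformers) is a method of pre-training language representations that achieves state-of-the-art accuracy on many popular natural language processing (NLP) tasks, such as question answering and text classification. See the original BERT paper.

- Dynamic quantization in PyTorch converts a floating-point model into a quantized model in which the weights are statically quantized to int8 or float16 and the activations are quantized dynamically. When the weights are quantized to int8, the activations are dynamically quantized (per batch) to int8. In PyTorch, the torch.quantization.quantize_dynamic API replaces the specified modules with dynamic weight-only quantized versions and returns the quantized model.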

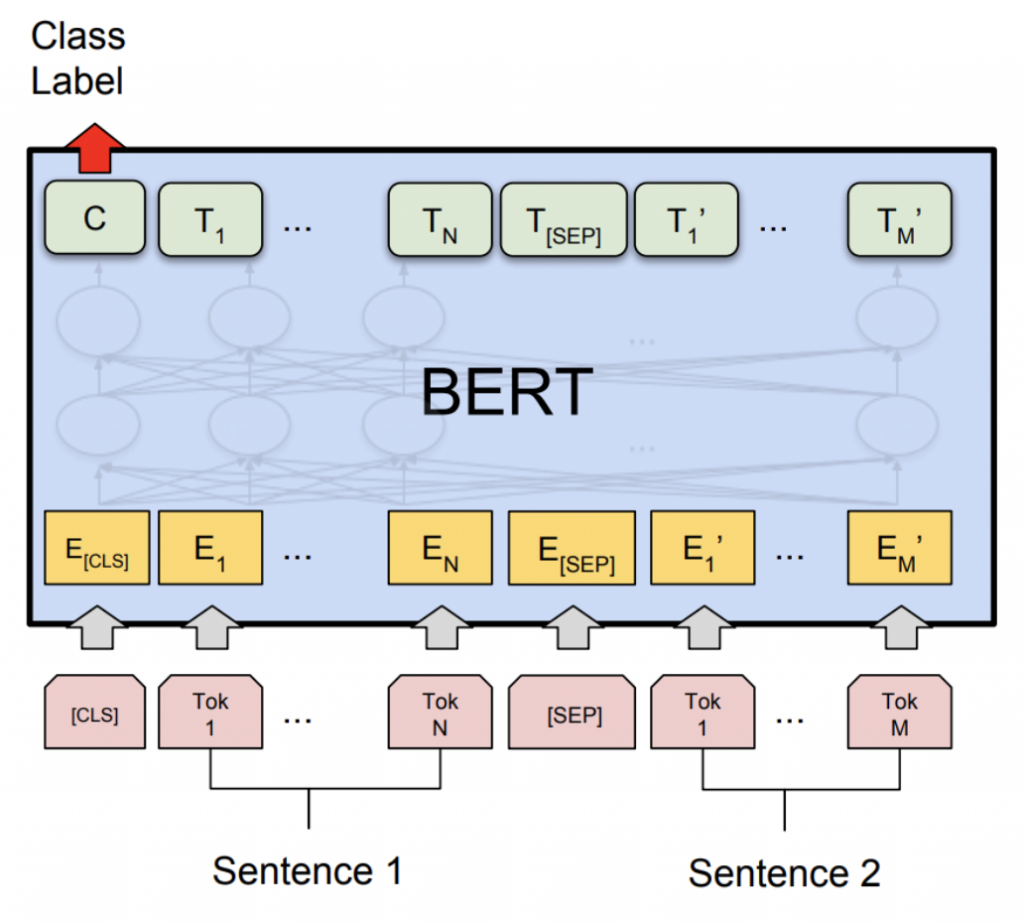

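- Accuracy and inference performance results are reported on the Microsoft Research Paraphrase Corpus (MRPC) task from the General Language Understanding Evaluation (GLUE) benchmark. MRPC (Dolan and Brockett, 2005) is a corpus of sentence pairs automatically extracted from online news sources, with human annotations indicating whether the two sentences in each pair are semantically equivalent. Because the classes are imbalanced (68% positive, 32% negative), it is common practice to evaluate with the F1 score. MRPC is a common NLP task for sentence-pair classification, as shown in the accompanying figure.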

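Setup

Install PyTorch and HuggingFace Transformers

First, follow the installation instructions in the PyTorch and HuggingFace GitHub repositories; the earlier blog post "PyTorch Installation" (《PyTorch安装》) can also serve as a reference. In addition, install the scikit-learn package, whose built-in helper function is used to compute the F1 score.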

pip install scikit-learn

pip install transformers

Import the necessary modules

from __future__ import absolute_import, division, print_function

import logging
import numpy as np
import os
import random
import sys
import time
import torch

from argparse import Namespace
from torch.utils.data import (DataLoader, RandomSampler, SequentialSampler,
                              TensorDataset)
# DistributedSampler is referenced by evaluate() further below.
from torch.utils.data.distributed import DistributedSampler
from tqdm import tqdm
from transformers import (BertConfig, BertForSequenceClassification, BertTokenizer,)
from transformers import glue_compute_metrics as compute_metrics
from transformers import glue_output_modes as output_modes
from transformers import glue_processors as processors
from transformers import glue_convert_examples_to_features as convert_examples_to_features

# Setup logging
logger = logging.getLogger(__name__)
logging.basicConfig(format = '%(asctime)s - %(levelname)s - %(name)s - %(message)s',
                    datefmt = '%m/%d/%Y %H:%M:%S',
                    level = logging.WARN)

logging.getLogger("transformers.modeling_utils").setLevel(
    logging.WARN)  # Reduce logging

print(torch.__version__)

Set the number of threads to 1 so that the FP32 and INT8 models are compared on single-thread performance.

torch.set_num_threads(1)
print(torch.__config__.parallel_info())

Helper functions

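The transformers library ships with built-in helper functions. Two of them are used here: one converts text examples into feature vectors, and the other measures the F1 score of the predictions.

The glue_convert_examples_to_features function converts text into input features:

- Tokenize the input sequences;

- Insert [CLS] at the beginning;

- Insert [SEP] between the first and second sentences, and at the end;

- Generate token type ids to indicate whether a token belongs to the first sequence or the second sequence.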
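The steps above can be sketched in plain Python. This is only an illustration of the resulting layout, not the glue_convert_examples_to_features implementation: the function name build_pair_features, the whitespace tokenizer, and the explicit [PAD] token are assumptions made for this example, whereas the real tokenizer uses WordPiece and maps tokens to vocabulary ids.

```python
def build_pair_features(sentence_a, sentence_b, max_length=16):
    """Hypothetical helper: lay out a sentence pair as [CLS] A [SEP] B [SEP]."""
    # Assumption: whitespace splitting stands in for WordPiece tokenization.
    tokens_a = sentence_a.lower().split()
    tokens_b = sentence_b.lower().split()

    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # Segment 0 covers [CLS], sentence A and the first [SEP]; segment 1 covers the rest.
    token_type_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    # 1 marks real tokens, 0 marks padding.
    attention_mask = [1] * len(tokens)

    pad = max_length - len(tokens)
    if pad < 0:
        raise ValueError("sentence pair is longer than max_length")
    tokens += ["[PAD]"] * pad
    token_type_ids += [0] * pad
    attention_mask += [0] * pad
    return tokens, token_type_ids, attention_mask


tokens, token_type_ids, attention_mask = build_pair_features(
    "He said hello", "He greeted them", max_length=10)
print(tokens)
print(token_type_ids)
print(attention_mask)
```

The three lists correspond to the input_ids (before vocabulary lookup), token_type_ids, and attention_mask tensors that the evaluation code later passes to the model.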

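The glue_compute_metrics function computes the F1 score, which can be interpreted as the harmonic mean of precision and recall; the F1 score reaches its best value at 1 and its worst at 0. Precision and recall contribute equally to the F1 score.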
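As a small worked example of the metric, F1 can be computed directly from prediction counts. The sketch below is plain Python with a hypothetical helper name (f1_score_binary) and made-up labels; the tutorial itself relies on glue_compute_metrics.

```python
def f1_score_binary(labels, preds):
    """Hypothetical helper: F1 for the positive class (label 1)."""
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    # Harmonic mean of precision and recall.
    return 2 * precision * recall / (precision + recall)


# Made-up labels and predictions: 4 true positives, 1 false positive, 1 false negative.
labels = [1, 1, 1, 0, 1, 0, 1, 0]
preds = [1, 1, 0, 0, 1, 1, 1, 0]
f1 = f1_score_binary(labels, preds)
print(round(f1, 4))
```

Here precision and recall are both 4/5, so their harmonic mean is 0.8 as well.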

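Download the dataset

Before running the MRPC task, run the download_glue_data.py script to download the GLUE data and unpack it into the glue_data directory.

The current download_glue_data.py script fails with an error; adding the following three lines fixes it (see the bug fix in the comments section of the original post):

import io

URLLIB = urllib.request

'MRPC': 'https://raw.githubusercontent.com/MegEngine/Models/master/official/nlp/bert/glue_data/MRPC/dev_ids.tsv' (inside the TASK2PATH dict)

python download_glue_data.py --data_dir='glue_data' --tasks='MRPC'

Fine-tune the BERT model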

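A pre-trained BERT model (the bert-base-uncased model from HuggingFace Transformers) is fine-tuned on the MRPC task to classify sentence pairs as semantically equivalent or not. The fine-tuning follows the MRPC example in HuggingFace Transformers: https://github.com/huggingface/transformers/tree/main/examples#mrpc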

export GLUE_DIR=./glue_data
export TASK_NAME=MRPC
export OUT_DIR=./$TASK_NAME/

python ./run_glue.py \
    --model_type bert \
    --model_name_or_path bert-base-uncased \
    --task_name $TASK_NAME \
    --do_train \
    --do_eval \
    --do_lower_case \
    --data_dir $GLUE_DIR/$TASK_NAME \
    --max_seq_length 128 \
    --per_gpu_eval_batch_size=8 \
    --per_gpu_train_batch_size=8 \
    --learning_rate 2e-5 \
    --num_train_epochs 3.0 \
    --save_steps 100000 \
    --output_dir $OUT_DIR

The command above fine-tunes the BERT model on the MRPC task. To save time, the model files (about 400 MB) can instead be downloaded directly into the local folder $OUT_DIR.

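Set global configurations

Set the global configurations used to evaluate the fine-tuned BERT model before and after dynamic quantization.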

configs = Namespace()

# The output directory for the fine-tuned model, $OUT_DIR.
configs.output_dir = "./MRPC/"

# The data directory for the MRPC task in the GLUE benchmark, $GLUE_DIR/$TASK_NAME.
configs.data_dir = "./glue_data/MRPC"

# The model name or path for the pre-trained model.
configs.model_name_or_path = "bert-base-uncased"
# The maximum length of an input sequence
configs.max_seq_length = 128

# Prepare GLUE task.
configs.task_name = "MRPC".lower()
configs.processor = processors[configs.task_name]()
configs.output_mode = output_modes[configs.task_name]
configs.label_list = configs.processor.get_labels()
configs.model_type = "bert".lower()
configs.do_lower_case = True

# Set the device, batch size, topology, and caching flags.
configs.device = "cpu"
configs.per_gpu_eval_batch_size = 8
configs.n_gpu = 0
configs.local_rank = -1
configs.overwrite_cache = False

# Set random seed for reproducibility.
def set_seed(seed):
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)

set_seed(42)

Load the fine-tuned BERT model

Load the tokenizer and the fine-tuned BERT sequence classifier model (FP32) from configs.output_dir.

tokenizer = BertTokenizer.from_pretrained(
    configs.output_dir, do_lower_case=configs.do_lower_case)

model = BertForSequenceClassification.from_pretrained(configs.output_dir)
model.to(configs.device)

Define the tokenize and evaluation functions

The tokenize and evaluation functions are reused from HuggingFace.

def evaluate(args, model, tokenizer, prefix=""):
    # Loop to handle MNLI double evaluation (matched, mis-matched)
    eval_task_names = ("mnli", "mnli-mm") if args.task_name == "mnli" else (args.task_name,)
    eval_outputs_dirs = (args.output_dir, args.output_dir + '-MM') if args.task_name == "mnli" else (args.output_dir,)

    results = {}
    for eval_task, eval_output_dir in zip(eval_task_names, eval_outputs_dirs):
        eval_dataset = load_and_cache_examples(args, eval_task, tokenizer, evaluate=True)

        if not os.path.exists(eval_output_dir) and args.local_rank in [-1, 0]:
            os.makedirs(eval_output_dir)

        args.eval_batch_size = args.per_gpu_eval_batch_size * max(1, args.n_gpu)
        # Note that DistributedSampler samples randomly
        eval_sampler = SequentialSampler(eval_dataset) if args.local_rank == -1 else DistributedSampler(eval_dataset)
        eval_dataloader = DataLoader(eval_dataset, sampler=eval_sampler, batch_size=args.eval_batch_size)

        # multi-gpu eval
        if args.n_gpu > 1:
            model = torch.nn.DataParallel(model)

        # Eval!
        logger.info("***** Running evaluation {} *****".format(prefix))
        logger.info(" Num examples = %d", len(eval_dataset))
        logger.info(" Batch size = %d", args.eval_batch_size)
        eval_loss = 0.0
        nb_eval_steps = 0
        preds = None
        out_label_ids = None
        for batch in tqdm(eval_dataloader, desc="Evaluating"):
            model.eval()
            batch = tuple(t.to(args.device) for t in batch)

            with torch.no_grad():
                inputs = {'input_ids': batch[0],
                          'attention_mask': batch[1],
                          'labels': batch[3]}
                if args.model_type != 'distilbert':
                    inputs['token_type_ids'] = batch[2] if args.model_type in ['bert', 'xlnet'] else None  # XLM, DistilBERT and RoBERTa don't use segment_ids
                outputs = model(**inputs)
                tmp_eval_loss, logits = outputs[:2]

                eval_loss += tmp_eval_loss.mean().item()
            nb_eval_steps += 1
            if preds is None:
                preds = logits.detach().cpu().numpy()
                out_label_ids = inputs['labels'].detach().cpu().numpy()
            else:
                preds = np.append(preds, logits.detach().cpu().numpy(), axis=0)
                out_label_ids = np.append(out_label_ids, inputs['labels'].detach().cpu().numpy(), axis=0)

        eval_loss = eval_loss / nb_eval_steps
        if args.output_mode == "classification":
            preds = np.argmax(preds, axis=1)
        elif args.output_mode == "regression":
            preds = np.squeeze(preds)
        result = compute_metrics(eval_task, preds, out_label_ids)
        results.update(result)

        output_eval_file = os.path.join(eval_output_dir, prefix, "eval_results.txt")
        with open(output_eval_file, "w") as writer:
            logger.info("***** Eval results {} *****".format(prefix))
            for key in sorted(result.keys()):
                logger.info(" %s = %s", key, str(result[key]))
                writer.write("%s = %s\n" % (key, str(result[key])))

    return results

def load_and_cache_examples(args, task, tokenizer, evaluate=False):
    if args.local_rank not in [-1, 0] and not evaluate:
        torch.distributed.barrier()  # Make sure only the first process in distributed training process the dataset, and the others will use the cache

    processor = processors[task]()
    output_mode = output_modes[task]
    # Load data features from cache or dataset file
    cached_features_file = os.path.join(args.data_dir, 'cached_{}_{}_{}_{}'.format(
        'dev' if evaluate else 'train',
        list(filter(None, args.model_name_or_path.split('/'))).pop(),
        str(args.max_seq_length),
        str(task)))
    if os.path.exists(cached_features_file) and not args.overwrite_cache:
        logger.info("Loading features from cached file %s", cached_features_file)
        features = torch.load(cached_features_file)
    else:
        logger.info("Creating features from dataset file at %s", args.data_dir)
        label_list = processor.get_labels()
        if task in ['mnli', 'mnli-mm'] and args.model_type in ['roberta']:
            # HACK(label indices are swapped in RoBERTa pretrained model)
            label_list[1], label_list[2] = label_list[2], label_list[1]
        examples = processor.get_dev_examples(args.data_dir) if evaluate else processor.get_train_examples(args.data_dir)
        # The pad_* arguments follow the older transformers API used by this script;
        # newer releases may not accept them.
        features = convert_examples_to_features(examples,
                                                tokenizer,
                                                label_list=label_list,
                                                max_length=args.max_seq_length,
                                                output_mode=output_mode,
                                                pad_on_left=bool(args.model_type in ['xlnet']),  # pad on the left for xlnet
                                                pad_token=tokenizer.convert_tokens_to_ids([tokenizer.pad_token])[0],
                                                pad_token_segment_id=4 if args.model_type in ['xlnet'] else 0,
        )
        if args.local_rank in [-1, 0]:
            logger.info("Saving features into cached file %s", cached_features_file)
            torch.save(features, cached_features_file)

    if args.local_rank == 0 and not evaluate:
        torch.distributed.barrier()  # Make sure only the first process in distributed training process the dataset, and the others will use the cache

    # Convert to Tensors and build dataset
    all_input_ids = torch.tensor([f.input_ids for f in features], dtype=torch.long)
    all_attention_mask = torch.tensor([f.attention_mask for f in features], dtype=torch.long)
    all_token_type_ids = torch.tensor([f.token_type_ids for f in features], dtype=torch.long)
    if output_mode == "classification":
        all_labels = torch.tensor([f.label for f in features], dtype=torch.long)
    elif output_mode == "regression":
        all_labels = torch.tensor([f.label for f in features], dtype=torch.float)

    dataset = TensorDataset(all_input_ids, all_attention_mask, all_token_type_ids, all_labels)
    return dataset

Apply dynamic quantization

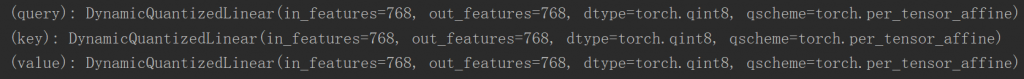

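Call torch.quantization.quantize_dynamic to apply dynamic quantization to the HuggingFace BERT model. Specifically: 1) specify that the torch.nn.Linear modules in the model are to be quantized; 2) specify that the weights are to be converted to quantized int8 values.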

quantized_model = torch.quantization.quantize_dynamic(
    model, {torch.nn.Linear}, dtype=torch.qint8
)
print(quantized_model)

Part of the output is shown below:

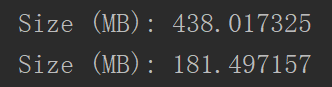
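To make the idea concrete, here is a rough pure-Python sketch of what a dynamically quantized linear layer does conceptually: the weights are quantized to int8 once, while the activation scale is computed at run time from each input. The names quantize_symmetric and DynamicQuantLinear, and the symmetric one-scale-per-row scheme, are simplifying assumptions for illustration; PyTorch's actual kernels differ (for example, they use zero points and optimized backends).

```python
def quantize_symmetric(values, qmax=127):
    """Quantize a list of floats to integers in [-qmax, qmax] with one scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / qmax
    return [round(v / scale) for v in values], scale


class DynamicQuantLinear:
    """Hypothetical stand-in for a dynamically quantized linear layer."""

    def __init__(self, weight_rows, bias):
        # Weights are quantized once, when the layer is converted.
        self.q_rows, self.w_scales = [], []
        for row in weight_rows:
            q_row, scale = quantize_symmetric(row)
            self.q_rows.append(q_row)
            self.w_scales.append(scale)
        self.bias = list(bias)

    def __call__(self, x):
        # The activation scale is derived from this particular input at run time.
        q_x, x_scale = quantize_symmetric(x)
        out = []
        for q_row, w_scale, b in zip(self.q_rows, self.w_scales, self.bias):
            acc = sum(qw * qx for qw, qx in zip(q_row, q_x))  # integer accumulation
            out.append(acc * w_scale * x_scale + b)           # rescale to float
        return out


# Made-up weights and input for the illustration.
weights = [[0.5, -1.0, 0.25], [1.5, 0.75, -0.5]]
bias = [0.1, -0.2]
x = [2.0, -1.0, 0.5]

layer = DynamicQuantLinear(weights, bias)
out = layer(x)
reference = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
print(out)
print(reference)
```

The quantized outputs stay close to the floating-point reference, which is the behavior the accuracy comparison later in the tutorial measures on the real model.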

Check the model size

First, check the model size. There is a significant reduction in model size (FP32 total size: 438 MB; INT8 total size: 181 MB).

def print_size_of_model(model):
    torch.save(model.state_dict(), "temp.p")
    print('Size (MB):', os.path.getsize("temp.p")/1e6)
    os.remove('temp.p')

print_size_of_model(model)
print_size_of_model(quantized_model)

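The BERT model used here (bert-base-uncased) has a vocabulary size V of 30522. With an embedding size of 768, the total size of the word embedding table is about 4 (bytes per FP32 value) * 30522 * 768 ≈ 90 MB. With quantization, the size of the non-embedding part of the model is therefore reduced from about 350 MB (FP32 model) to about 90 MB (INT8 model).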
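The arithmetic can be checked with a few lines of Python. The sketch assumes that the word embedding table stays in FP32 in both models (only torch.nn.Linear modules are quantized) and, for simplicity, ignores the much smaller position and segment embeddings; the variable names are illustrative.

```python
BYTES_PER_FP32 = 4
vocab_size = 30522   # bert-base-uncased vocabulary size
hidden_size = 768    # embedding size

embedding_bytes = BYTES_PER_FP32 * vocab_size * hidden_size
embedding_mb = embedding_bytes / 1e6  # same MB convention as print_size_of_model

fp32_total_mb = 438.0  # total sizes reported above
int8_total_mb = 181.0

fp32_rest_mb = fp32_total_mb - embedding_mb
int8_rest_mb = int8_total_mb - embedding_mb

print(f"word embedding table: {embedding_mb:.1f} MB")
print(f"non-embedding part, FP32: {fp32_rest_mb:.1f} MB")
print(f"non-embedding part, INT8: {int8_rest_mb:.1f} MB")
print(f"reduction of the non-embedding part: {fp32_rest_mb / int8_rest_mb:.2f}x")
```

The non-embedding part shrinks by close to the 4x expected when going from 32-bit to 8-bit weights, while the total model shrinks less because the embedding table is unchanged.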

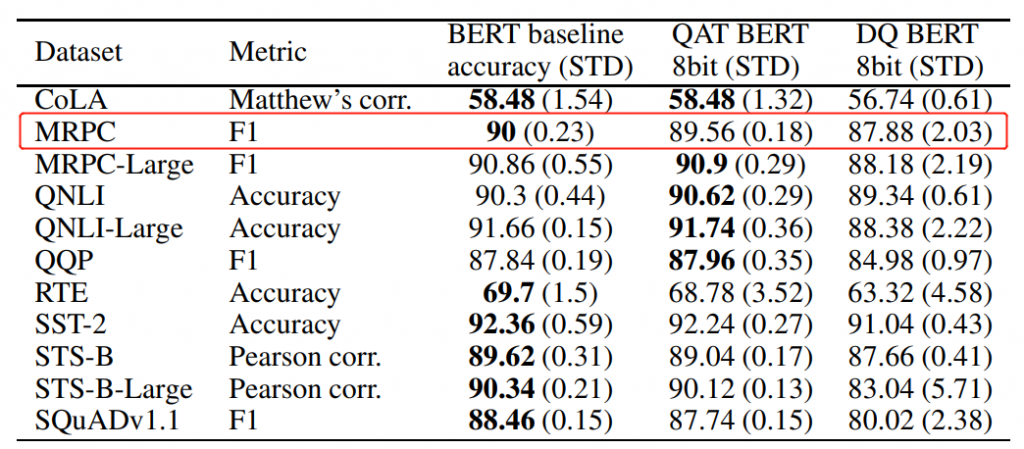

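After applying post-training dynamic quantization to the fine-tuned BERT model on the MRPC task, the F1 score is about 0.6% lower. For comparison, the paper Q8BERT: Quantized 8Bit BERT (Table 1) reports 0.8788 with post-training dynamic quantization and 0.8956 with quantization-aware training. The main difference is that PyTorch supports asymmetric quantization, whereas the Q8BERT paper supports only symmetric quantization.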
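The difference between the two schemes can be illustrated with a small pure-Python sketch. The helper names and sample values are assumptions for illustration only; neither function reproduces the exact implementation in PyTorch or Q8BERT. For a tensor whose values are all non-negative, symmetric quantization leaves the negative half of the int8 range unused, while asymmetric quantization uses a zero point so that the whole range covers the observed interval.

```python
def roundtrip_symmetric(values, qmax=127):
    """Quantize to [-qmax, qmax] with the zero point fixed at 0, then dequantize."""
    scale = max(abs(v) for v in values) / qmax
    return [round(v / scale) * scale for v in values]


def roundtrip_asymmetric(values, qmin=-128, qmax=127):
    """Quantize to [qmin, qmax] using a scale and a zero point, then dequantize."""
    lo = min(min(values), 0.0)  # the represented range must contain 0
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin)
    zero_point = round(qmin - lo / scale)
    restored = []
    for v in values:
        q = max(qmin, min(qmax, round(v / scale) + zero_point))
        restored.append((q - zero_point) * scale)
    return restored


def max_error(values, restored):
    return max(abs(a - b) for a, b in zip(values, restored))


# Made-up non-negative values, for example activations after a ReLU-like function.
values = [0.0, 0.1, 0.37, 1.2, 2.5, 3.9, 6.0]
sym_err = max_error(values, roundtrip_symmetric(values))
asym_err = max_error(values, roundtrip_asymmetric(values))
print("symmetric max error: ", sym_err)
print("asymmetric max error:", asym_err)
```

On this input the asymmetric step size is roughly half the symmetric one (6/255 versus 6/127), so the worst-case rounding error is smaller.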

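For the single-thread comparison, the number of threads is set to 1. Intra-op parallelization is also supported for these quantized INT8 operators. Multiple threads can be enabled with torch.set_num_threads(N), where N is the number of intra-op parallelization threads. A prerequisite for intra-op parallelization is building PyTorch with the right backend, such as OpenMP, Native, or TBB. torch.__config__.parallel_info() can be used to check the parallelization settings.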

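Evaluate inference accuracy and time

Next, compare the inference time and evaluation accuracy of the original FP32 model and the dynamically quantized INT8 model.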

def time_model_evaluation(model, configs, tokenizer):
    eval_start_time = time.time()
    result = evaluate(configs, model, tokenizer, prefix="")
    eval_end_time = time.time()
    eval_duration_time = eval_end_time - eval_start_time
    print(result)
    print("Evaluate total time (seconds): {0:.1f}".format(eval_duration_time))

# Evaluate the original FP32 BERT model
time_model_evaluation(model, configs, tokenizer)

# Evaluate the INT8 BERT model after the dynamic quantization
time_model_evaluation(quantized_model, configs, tokenizer)

| Prec | F1 score | Model Size | 1 thread (s) | 4 threads (s) |
|------|----------|------------|--------------|---------------|
| FP32 | 0.9019 | 438 MB | 94.9 | 22.2 |
| INT8 | 0.8977 | 181 MB | 49.4 | 12.7 |
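The ratios implied by the table can be computed with a short script. The numbers are copied from the table; reading the timing columns as total evaluation time in seconds is an assumption based on what time_model_evaluation prints, and the dictionary layout is purely illustrative.

```python
# Values copied from the results table above.
results = {
    "FP32": {"size_mb": 438, "t1": 94.9, "t4": 22.2},
    "INT8": {"size_mb": 181, "t1": 49.4, "t4": 12.7},
}

fp32, int8 = results["FP32"], results["INT8"]
size_ratio = fp32["size_mb"] / int8["size_mb"]
speedup_1_thread = fp32["t1"] / int8["t1"]
speedup_4_threads = fp32["t4"] / int8["t4"]

print(f"model size reduction: {size_ratio:.2f}x")
print(f"speedup with 1 thread: {speedup_1_thread:.2f}x")
print(f"speedup with 4 threads: {speedup_4_threads:.2f}x")
```

On these measurements the INT8 model is a little under twice as fast as the FP32 model in both thread settings.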

Serialize the quantized model

After tracing the model, the quantized model can be serialized and saved for future use with torch.jit.save.

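# Helper assumed by the snippet below (not defined in the text above):
# creates a random integer tensor of the given shape with values in [0, vocab_size).
def ids_tensor(shape, vocab_size):
    return torch.randint(0, vocab_size, tuple(shape), dtype=torch.long, device='cpu')

input_ids = ids_tensor([8, 128], 2)
token_type_ids = ids_tensor([8, 128], 2)
attention_mask = ids_tensor([8, 128], vocab_size=2)
dummy_input = (input_ids, attention_mask, token_type_ids)
# Depending on the transformers version, tracing may require a model loaded with torchscript=True.
traced_model = torch.jit.trace(quantized_model, dummy_input)
torch.jit.save(traced_model, "bert_traced_eager_quant.pt")

To load the quantized model, use torch.jit.load: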

loaded_quantized_model = torch.jit.load("bert_traced_eager_quant.pt")